Learning AI Poorly: Cloning Voices. What the?

(originally posted to LinkedIn)

I feel like most of us can agree that AI is nuts. This week, AI voice cloning wins as the crazy application of AI that I want to talk about. Let’s take a shallow dive and figure out how it works.

Text to Speech

If you read my article about Convolutional Neural Networks (CNNs) and how they are used to make my cheap guitar sound like a super rare, boutique rock machine, you’ll know that they work by training a model on two audio streams that are playing the exact same thing. The first stream is my cheap guitar playing Hot Cross Buns, and the second is the super rare, awesome thing playing the exact same song but, of course, sounding fantastic. The neural network is trained on enough of these paired signals that it can take new, distinct, cheap-sounding input and “infer” what it would sound like through the boutique instrument. Now I can play “Sweet Child o’ Mine” and sound exactly like Slash.
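To make the paired-training idea concrete, here is the smallest possible version in plain Python: a single convolution kernel (one linear layer, no activation) fitted by gradient descent so that the “cheap” signal, run through the kernel, matches the “boutique” one. Everything here is an assumption for illustration: the random `dry` signal stands in for the cheap guitar, and the `wet` signal comes from a made-up three-tap filter rather than a real amp. Real amp models stack many layers with nonlinearities.

```python
import math
import random

def conv1d(signal, kernel):
    """Causal 1-D convolution: y[n] = sum_k kernel[k] * signal[n - k], zero before n=0."""
    out = []
    for n in range(len(signal)):
        acc = 0.0
        for k, w in enumerate(kernel):
            if n - k >= 0:
                acc += w * signal[n - k]
        out.append(acc)
    return out

def train_kernel(dry, wet, taps=3, lr=0.5, epochs=200):
    """Fit a kernel so conv1d(dry, kernel) ~= wet via gradient descent on mean squared error."""
    kernel = [0.0] * taps
    n = len(dry)
    for _ in range(epochs):
        pred = conv1d(dry, kernel)
        grads = [0.0] * taps
        for i in range(n):
            err = pred[i] - wet[i]
            for k in range(taps):
                if i - k >= 0:
                    grads[k] += 2.0 * err * dry[i - k] / n
        kernel = [w - lr * g for w, g in zip(kernel, grads)]
    return kernel

# Paired training data: the same "performance" through both rigs (all made up).
random.seed(0)
dry = [random.uniform(-1.0, 1.0) for _ in range(200)]  # cheap guitar
secret_tone = [0.6, 0.3, 0.1]                           # hypothetical "boutique" filter
wet = conv1d(dry, secret_tone)                          # boutique recording

learned = train_kernel(dry, wet)

# "Inference": a new riff the model never saw during training.
new_riff = [math.sin(2 * math.pi * 440 * t / 8000) for t in range(100)]
predicted = conv1d(new_riff, learned)
print([round(w, 3) for w in learned])
```

Because the fake boutique sound really is just a filter, the learned kernel lands on `secret_tone`, and the new riff comes out sounding “boutique” even though it was never in the training data.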

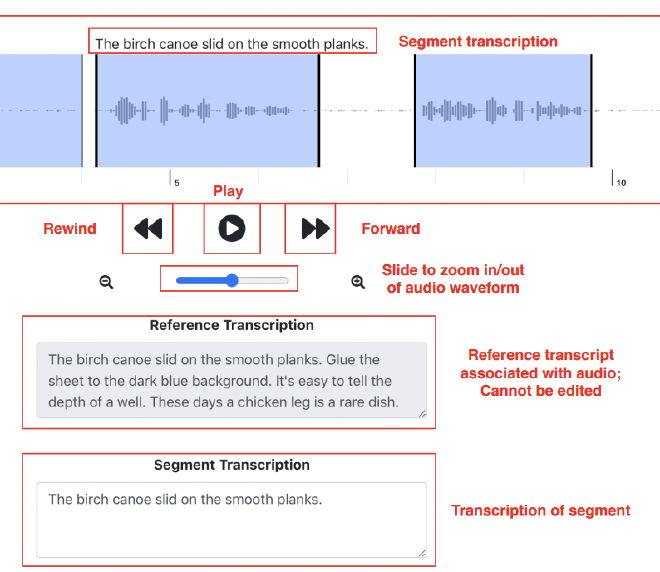

Text to speech does pretty much the exact same thing, except the input is literally text and the output is someone saying the words. To train, someone (or a computer program) listens to an audio file and writes down every single word spoken along with the timestamp of each word. Imagine Tom Hanks reading an audio book. All you’d have to do is line up each word in the book with the sound from the audio book, and you’ve got a training data set. Train a CNN on that data to get a model. Now, give that model a new sentence and let it “infer” the audio output. If all goes well, it will sound like Tom Hanks.
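The “line up each word with the audio” step might look something like this. It is a minimal sketch, assuming you already have word timestamps from a transcriber; the `WordStamp` type and `make_training_pairs` helper are hypothetical names, and real TTS pipelines are considerably more involved than cutting out word clips.

```python
from dataclasses import dataclass

@dataclass
class WordStamp:
    word: str
    start_s: float  # when the word starts, in seconds
    end_s: float    # when the word ends, in seconds

def make_training_pairs(samples, sample_rate, stamps):
    """Cut one long recording into (text, audio) pairs using word timestamps."""
    pairs = []
    for s in stamps:
        start = round(s.start_s * sample_rate)
        end = round(s.end_s * sample_rate)
        pairs.append((s.word, samples[start:end]))
    return pairs

# Tiny fake "audio book": 30 samples at 10 samples per second.
audio = list(range(30))
stamps = [WordStamp("hello", 0.0, 0.5), WordStamp("world", 0.5, 1.2)]
pairs = make_training_pairs(audio, 10, stamps)
print(pairs[0])  # ('hello', [0, 1, 2, 3, 4])
```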

To be honest, that isn’t very exciting. I mean, it is neat, but once you understand how neural networks do their thing (numbers go in, and the network spits out other numbers that, in this case, make up an audio file), it’s just… yay. But then you run into something like:

How the hell does that magic work?

Retrieval-based-Voice-Conversion and SO-VITS-SVC

As you can imagine, getting an AI model to sing a song as a different artist takes a lot of steps. It started with the paper “A Comparison of Discrete and Soft Speech Units for Improved Voice Conversion,” which describes an architecture that can transform one voice into another without changing the content (what is actually being said). It does this by having:

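- Two content encoders. The first produces discrete speech units (by clustering features from a self-supervised speech model), but discrete units tend to cause mispronunciations, so the authors created a second, “soft” encoder that predicts a distribution over those discrete units to help get the pronunciation right.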

- An acoustic model that transforms the speech units into a target spectrogram (how the frequencies in the sound vary over time).

- A vocoder (speech synthesizer) that converts the spectrogram into an audio waveform.
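The difference between discrete and soft units is easier to see with numbers. This is a toy comparison, not the paper’s model: it assumes made-up 2-D features and centroids, and the softmax over cosine similarities is a simplification. In the paper, the discrete units come from k-means clustering of HuBERT features, and the soft encoder is trained to predict a distribution over those clusters.

```python
import math

def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def discrete_unit(feature, centroids):
    """Hard pick: index of the most similar centroid (one unit per frame)."""
    sims = [cosine(feature, c) for c in centroids]
    return max(range(len(centroids)), key=lambda i: sims[i])

def soft_unit_distribution(feature, centroids, temperature=0.1):
    """Soft view: a probability for every centroid instead of a single pick."""
    sims = [cosine(feature, c) / temperature for c in centroids]
    top = max(sims)
    exps = [math.exp(s - top) for s in sims]  # subtract the max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]

centroids = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]  # made-up "speech unit" centroids
frame = [0.7, 0.6]                                  # a frame that sits between units 0 and 1
print(discrete_unit(frame, centroids))              # 0
# Mostly unit 0, a real share for unit 1, almost nothing for unit 2.
print([round(p, 3) for p in soft_unit_distribution(frame, centroids)])
```

The hard pick throws away the fact that this frame was also fairly close to unit 1; the soft version keeps that ambiguity around, which is roughly why soft units mispronounce less.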

Then came the paper “Conditional Variational Autoencoder with Adversarial Learning for End-to-End Text-to-Speech,” which describes VITS, a text-to-speech model that generates more natural-sounding audio:

> Our method adopts variational inference augmented with normalizing flows and an adversarial training process, which improves the expressive power of generative modeling. We also propose a stochastic duration predictor to synthesize speech with diverse rhythms from input text. With the uncertainty modeling over latent variables and the stochastic duration predictor, our method expresses the natural one-to-many relationship in which a text input can be spoken in multiple ways with different pitches and rhythms.
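One piece of that quote is easy to play with: durations. Each sound in the text is held for some number of audio frames, and sampling those durations instead of fixing them is what lets the same sentence come out with different rhythms. This sketch is a toy, not VITS: it jitters made-up average durations with Gaussian noise in log space, whereas VITS learns durations with a flow-based stochastic duration predictor. The phoneme list, durations, and function names are hypothetical.

```python
import math
import random

def expand_by_durations(tokens, durations):
    """Repeat each token for its duration in frames, so text length matches audio length."""
    frames = []
    for tok, d in zip(tokens, durations):
        frames.extend([tok] * d)
    return frames

def sample_durations(mean_frames, spread, rng):
    """Toy stochastic durations: jitter each mean in log space, keeping at least 1 frame."""
    out = []
    for m in mean_frames:
        d = m * math.exp(rng.gauss(0.0, spread))
        out.append(max(1, round(d)))
    return out

phonemes = ["HH", "AH", "L", "OW"]  # roughly "hello"
mean = [3, 5, 4, 8]                 # made-up average durations, in frames
rng = random.Random(0)
take_1 = expand_by_durations(phonemes, sample_durations(mean, 0.3, rng))
take_2 = expand_by_durations(phonemes, sample_durations(mean, 0.3, rng))
print(take_1)
print(take_2)
```

Same four sounds, same order, but each take can stretch them differently: one text input, many ways to say it.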

So, what happened is people combined these methods into a project called SO-VITS-SVC (SoftVC VITS Singing Voice Conversion), which enabled real-time voice conversion and faster training, and added both a graphical user interface and a command-line interface so people could use it more easily.

It is able to clone voices quickly because it takes the original voice and compares its sound to an array of pre-trained models to pick the one that most closely resembles it. It uses that model to generate the new voice, and in the final vocoder step it adds some processing to make up the difference between the voice you want and the model that was used.

SO-VITS-SVC works really well, but there is a newer thing called Retrieval-based-Voice-Conversion (RVC) that apparently sounds better. I cannot find a paper or anything that helps me understand why. The only thing I found was this Stack Overflow question, which says RVC:

> used ContentVec as the content encoder rather than HuBERT. ContentVec is an improved version of HuBERT, and it can ignore speaker information and only focus on content. Secondly, the RVC used top1 retrieval to reduce tone leakage. It is just like the codebook used in VQ-VAE, mapping the unseen input into known input in the training dataset.

So… it uses a better content encoder, and instead of trusting the features it pulls out of your voice, it swaps each one for the closest match from the training data? Not sure… Must be magic.
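Going by the quoted description, top-1 retrieval can be sketched as a nearest-neighbor lookup: every feature frame extracted from the input is replaced by the closest frame stored from the target speaker’s training data, so the output is built only from features the model has actually seen. This is a minimal sketch with made-up 2-D vectors and Euclidean distance; real systems use high-dimensional content features and a vector index, and the function names here are hypothetical.

```python
import math

def top1_retrieve(frame, bank):
    """Return the stored training frame closest (Euclidean distance) to `frame`."""
    return min(bank, key=lambda stored: math.dist(frame, stored))

def map_to_training_features(frames, bank):
    """Replace every input frame with its nearest neighbor from the training bank."""
    return [top1_retrieve(f, bank) for f in frames]

# Hypothetical feature bank built from the target speaker's training data.
training_bank = [[0.0, 0.0], [1.0, 1.0], [2.0, 0.0]]
# Features extracted from a new, unseen input recording.
input_frames = [[0.9, 1.2], [1.8, 0.1]]
print(map_to_training_features(input_frames, training_bank))  # [[1.0, 1.0], [2.0, 0.0]]
```

Since every output frame comes from the training bank, leftover traits of the input singer have less room to sneak through, which is the “reduce tone leakage” point in the quote.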