Learning AI the Hard Way: Why I Sold My 100 Watt Marshall Stack for a Neural Network

(originally posted on LinkedIn)

AI and machine learning for audio are fascinating and, much like text, seem magical… until you dig into the details and realize that, much like ChatGPT, audio ML applications just take in a list of integers and output another list of integers. Now… it is a really, really long list, but a list nonetheless.

There are machines called deep Convolutional Neural Networks (CNNs) that are really good at synthesizing audio. They are used in text-to-speech and for modifying streams of audio data in novel and interesting ways.

That guitar amp pictured above is a machine that uses vacuum tubes and analog circuitry to modify the audio signal coming out of a guitar to make it sound like rock… Its job is to take a plain, acoustic guitar signal that sounds like a dork in a college dorm playing Wonderwall and modify it into the face-melting tone of Jimi Hendrix or Slash. Surprisingly, a CNN is a machine that can do the exact same thing.

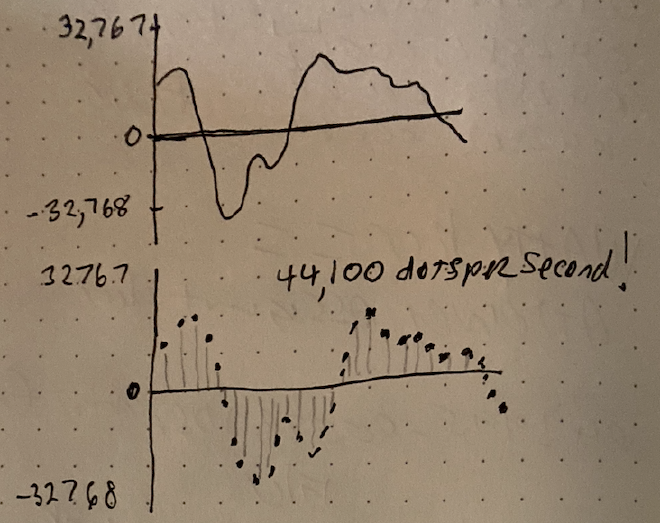

How does that work? Well… computers are not analog, they are digital, which means everything is ones and zeros and, in most cases, those ones and zeros are packed together to represent numbers. To record audio, they use an Analog to Digital Converter (ADC) that takes a signal, or audio wave, and turns it into numbers. To do that, it looks at the signal every 1/xth of a second (x being the sample rate) and writes the value into a list. Like this:

In that picture, we are sampling the analog audio signal (top) at 16 bits, meaning we pick values between -32,768 and 32,767, and we do that 44,100 times every second. That gives us a very long list of numbers… When we graph that list of numbers (bottom), it pretty much looks like the analog signal. In fact, if we feed that list of numbers to a Digital to Analog Converter (DAC), we will output an analog signal that sounds pretty much exactly like what went in.
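If you want to see that list for yourself, here is a minimal sketch that fakes the ADC in plain Python. It "samples" a made-up 440 Hz sine wave instead of a real guitar, and the function name `sample_sine` and the half-volume amplitude are just my assumptions for illustration.

```python
import math

SAMPLE_RATE = 44_100   # samples per second, as in the text
MAX_INT16 = 32_767     # largest 16-bit signed value

def sample_sine(freq_hz, seconds, amplitude=0.5):
    """Return a list of 16-bit integers approximating a sine wave."""
    n_samples = int(SAMPLE_RATE * seconds)
    samples = []
    for n in range(n_samples):
        t = n / SAMPLE_RATE                       # time of this sample in seconds
        value = amplitude * math.sin(2 * math.pi * freq_hz * t)
        samples.append(round(value * MAX_INT16))  # quantize to an integer
    return samples

samples = sample_sine(freq_hz=440.0, seconds=1.0)
print(len(samples))    # one second of audio is 44,100 numbers
print(samples[:5])     # the first few entries of the very long list
```

One second of a single note is already 44,100 integers, which is why the lists get long so quickly.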

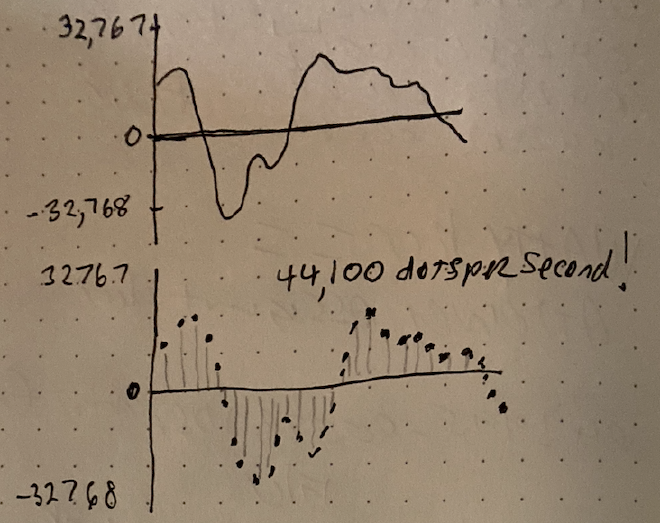

Ok, so what does that 100 Watt Marshall amp do? It modifies the signal by adding distortion, compression, clipping, etc. If we were to graph it, it might make the original signal (top) into something that looks like the bottom signal:

What if we train a neural network on those two signals (input and output) so that it “learns” to produce the output list of numbers given the input? Then, if we give it a different list of numbers (input signal) it can generate an output that would be very similar to what the real, analog amp would create. Can we do that? Sure, why the hell not.

Two of my favorite flavors of neural networks that are good for this are called WaveNet and LSTM (Long Short-Term Memory). You can set these networks up and train them on real data (they literally use WAV files, which are uncompressed lists of numbers).
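To show that a WAV file really is just a list of numbers with a small header on top, here is a rough sketch using Python's built-in `wave` and `struct` modules. It writes five integers to a mono 16-bit file and reads them back. The helper names and the file name `example.wav` are hypothetical, and real recordings are often stereo or 24-bit, which this sketch does not handle.

```python
import os
import struct
import tempfile
import wave

def write_wav(path, samples, sample_rate=44_100):
    """Write a list of 16-bit integers as a mono WAV file."""
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)            # mono
        wav.setsampwidth(2)            # 2 bytes = 16 bits per sample
        wav.setframerate(sample_rate)
        # "<h" means little-endian signed 16-bit, one per sample
        wav.writeframes(struct.pack(f"<{len(samples)}h", *samples))

def read_wav(path):
    """Read a mono 16-bit WAV file back into a list of integers."""
    with wave.open(path, "rb") as wav:
        n_frames = wav.getnframes()
        raw = wav.readframes(n_frames)
    return list(struct.unpack(f"<{n_frames}h", raw))

original = [0, 1000, -1000, 32_767, -32_768]
path = os.path.join(tempfile.mkdtemp(), "example.wav")  # hypothetical file name
write_wav(path, original)
round_trip = read_wav(path)
print(round_trip == original)
```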

Training means you record the input going into the amp (your raw guitar signal) and also record the face-melting audio coming out of the amplifier. Now you have two different WAV files that you use to train the model. Training adjusts the model's parameters so that it is able to generate something very close to the real output given the input.
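Here is a toy version of that training loop, just to make "adjusts its parameters" concrete. This is not WaveNet or an LSTM: the "amp" is a pretend cubic soft clipper I made up, the "network" has only two parameters, and the lists stand in for the two WAV files. The idea is the same, though: nudge the parameters until the model's output matches the recorded output.

```python
import math

def fake_amp(x):
    """Pretend amp: a cubic soft clipper (an assumption, not a real Marshall)."""
    return 1.5 * x - 0.5 * x ** 3

# "Recordings": the clean input and what the pretend amp did to it.
clean = [math.sin(2 * math.pi * n / 100) for n in range(400)]
amped = [fake_amp(x) for x in clean]

def model(x, a, b):
    """Two-parameter stand-in for the neural network."""
    return a * x + b * x ** 3

a, b = 1.0, 0.0          # start by passing the signal through unchanged
learning_rate = 0.5

for step in range(2000):
    grad_a = grad_b = 0.0
    for x, y in zip(clean, amped):
        error = model(x, a, b) - y
        grad_a += 2 * error * x         # d(error^2)/da
        grad_b += 2 * error * x ** 3    # d(error^2)/db
    # Gradient descent: move each parameter against its average gradient.
    a -= learning_rate * grad_a / len(clean)
    b -= learning_rate * grad_b / len(clean)

print(round(a, 3), round(b, 3))   # should land close to the amp's hidden 1.5 and -0.5

# Try the trained model on input values it did not see during training.
new_input = [0.3, -0.7, 0.9]
predicted = [model(x, a, b) for x in new_input]
print(predicted)
```

A real amp model has thousands of parameters instead of two, and it looks at a window of past samples rather than one sample at a time, but the loop has the same shape: predict, measure the error, adjust.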

Once the model is trained well enough, you can use it for “inference”, which means that when you give it a new list of numbers it has never seen before, it will generate a list of output numbers that should sound a lot like what the real amplifier would produce.

Does it work? Yes it does! Surprisingly well. There are a lot of open source projects and commercial products that use neural networks to make you sound like you’re using real, analog, boutique, and rare gear. Communities of people use these projects to make models of real gear that they own, then share them with others.

In some ways, it is no longer necessary to collect and maintain these super rare, expensive, and quirky tube amps and analog guitar pedals to get those face-melting tones. You can just open up a model of a $300,000 Dumble Overdrive Special and play it like you own it. It is kind of spectacular.

If you’re interested in learning more, google “Neural Amp Modeler (NAM)”, which uses WaveNet, and GuitarML’s projects, which use LSTM models, and check out the YouTube demos. Both also have GitHub pages.

Did I really sell my Marshall stack? Yes… yes I did. However! While there is nothing magical about AI and ML, there is something magical about plugging into an amp that generates 100 watts of pure, vintage, vacuum tube, ear-bleeding power that pushes 50-year-old, hand-wound speakers into stomping out so much air you can feel it in your chest. There isn’t an AI that can do anything like that… yet.