Learning AI Poorly: Mixture of Experts (MoE)

(originally posted to LinkedIn)

As a rule of thumb, the more parameters a large language model has, the better it tends to perform. For example, Llama 2 70B has 70 billion parameters and works well. GPT-3 has 175 billion parameters and works great. GPT-4 is rumored to have around 1.7 trillion (OpenAI has not confirmed a number) and works even better.

A company called Mistral AI has created a model that takes a different approach. It has about 47 billion parameters, and when it runs, only about 13 billion of those are used for any given token. So, it is lean and fast, and it outperforms or matches Llama 2 70B and GPT-3.5 on benchmarks. The model is called Mixtral-8x7B and the architecture is called “Mixture of Experts,” or MoE for short. They published a paper about it on 8 January 2024. How does it work?

The idea (at a super high level) is to train several distinct and lightweight neural networks on subsets of data so that each network becomes sort of an “expert” in that area. You can imagine that maybe one network is really good at generating code while another is great at generating recipes and another just happens to be good at answering physics questions. Mixtral-8x7B has 8 of these “expert” blocks in each of its layers. As each token passes through a layer, the two most promising “experts” for that token are picked, and their outputs are blended together to give the result.

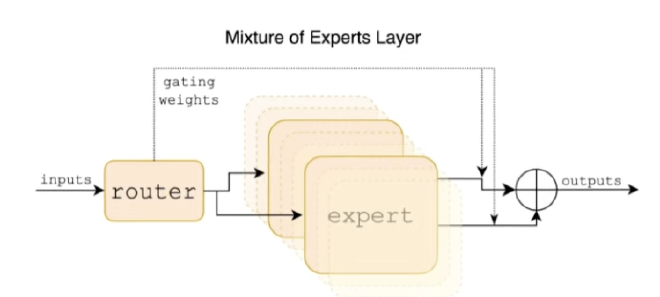

The thing that selects an expert is called a “router.” It too is a neural network that is trained to sort of know which expert is most likely to give the best answer. Something like this:
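To make the router a little more concrete, here is a toy sketch of top-2 routing in plain Python. It is not Mistral's code. The sizes are tiny, the weights are random, and each "expert" is a single made-up linear layer (`expert_weights`) rather than a real feed-forward block. The one thing it borrows from the paper is the shape of the idea: score every expert, keep the best two, softmax those two scores, and blend the two outputs.

```python
import math
import random

NUM_EXPERTS = 8  # Mixtral-8x7B has 8 experts per layer
TOP_K = 2        # the paper routes each token to 2 of them
DIM = 4          # toy hidden size, far smaller than the real model

random.seed(0)


def random_matrix(rows, cols):
    return [[random.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


def matvec(matrix, vector):
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


# Hypothetical stand-ins: random weights instead of trained ones.
router_weights = random_matrix(NUM_EXPERTS, DIM)  # one score per expert
expert_weights = [random_matrix(DIM, DIM) for _ in range(NUM_EXPERTS)]


def route(token):
    """Return [(expert_index, gate_weight), ...] for the top-k experts."""
    logits = matvec(router_weights, token)
    top = sorted(range(NUM_EXPERTS), key=lambda i: logits[i], reverse=True)[:TOP_K]
    # Softmax over only the selected logits, so the gate weights sum to 1.
    peak = max(logits[i] for i in top)
    exps = [math.exp(logits[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


def moe_layer(token):
    """Blend the chosen experts' outputs; the other experts never run."""
    output = [0.0] * DIM
    for index, gate in route(token):
        expert_out = matvec(expert_weights[index], token)
        output = [o + gate * y for o, y in zip(output, expert_out)]
    return output


token = [0.5, -1.0, 0.25, 2.0]  # a made-up token vector
chosen = route(token)
print(chosen)            # two (expert index, weight) pairs
print(moe_layer(token))  # blended output, same length as the token
```

Notice that `moe_layer` only ever multiplies by two of the eight expert matrices. That skipped work is where the speed comes from.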

I know a second ago I implied that each “expert” is trained on a single domain, but… I lied. Totally not true. Since the router is a neural network with trained parameters, it only knows about the tokens it sees during training. And, since this is not magic, it isn’t like the router “knows” what a recipe or a physics problem is. So, the “experts” are maybe better characterized as “really good at producing the right output given a sequence of tokens.” In fact, the paper says:

> Surprisingly, we do not observe obvious patterns in the assignment of experts based on the topic. For instance, at all layers, the distribution of expert assignment is very similar for ArXiv papers (written in Latex), for biology (PubMed Abstracts), and for Philosophy (PhilPapers) documents. Only for DM Mathematics we note a marginally different distribution of experts. This divergence is likely a consequence of the dataset’s synthetic nature and its limited coverage of the natural language spectrum, and is particularly noticeable at the first and last layers, where the hidden states are very correlated to the input and output embeddings respectively.

So, it isn’t like you could run a single expert by itself and have an amazing helper in the kitchen. You still need the entire model to get good results.

The reason this model is exciting is that it is smaller than others, performs just as well, and is extremely efficient: for each token, only the parameters that are needed get computed, and the rest are left dark. In a traditional (dense) LLM, the entire thing needs to light up to get a result.
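To put rough numbers on “lit up” versus “left dark,” here is a back-of-the-envelope parameter count. The hyperparameters are the ones I read off the architecture table in the Mixtral paper. The rest is my own simplification: I assume three weight matrices per expert, assume separate input and output embedding matrices, and ignore the tiny normalization weights. Treat it as a sanity check, not an official tally.

```python
# Hyperparameters as I read them from the Mixtral paper's architecture table.
DIM = 4096
N_LAYERS = 32
HIDDEN_DIM = 14336
N_HEADS = 32
N_KV_HEADS = 8
HEAD_DIM = 128
VOCAB_SIZE = 32000
NUM_EXPERTS = 8
TOP_K = 2


def count_parameters(experts_used):
    """Rough count, assuming `experts_used` experts per layer are involved."""
    expert = 3 * DIM * HIDDEN_DIM  # assumption: three weight matrices per expert
    attention = (
        2 * DIM * N_HEADS * HEAD_DIM       # query and output projections
        + 2 * DIM * N_KV_HEADS * HEAD_DIM  # key and value projections
    )
    router = DIM * NUM_EXPERTS         # one score per expert
    embeddings = 2 * VOCAB_SIZE * DIM  # assumption: untied input/output matrices
    per_layer = attention + router + experts_used * expert
    return N_LAYERS * per_layer + embeddings


total = count_parameters(NUM_EXPERTS)  # everything that has to be stored
active = count_parameters(TOP_K)       # what one token actually touches

print(f"total:  {total / 1e9:.1f}B")   # total:  46.7B
print(f"active: {active / 1e9:.1f}B")  # active: 12.9B
```

This also shows why “8x7B” does not mean 56 billion parameters. Only the expert blocks come in eight copies, while the attention layers and embeddings are shared.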

Exciting!