Computer Vision Using a Thing Called OpenCV

(originally posted on LinkedIn)

It seems like Large Language Models (LLMs), with their magical chatbots and whatnot, have dominated the AI/ML news for long enough to feel… I don’t know… Boring?

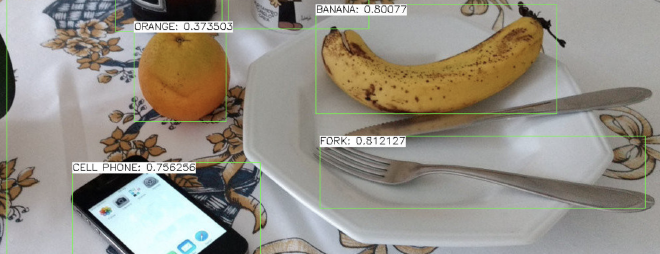

Have you ever wondered how a self-driving car can avoid a school bus full of kittens or navigate through a busy parking lot? Ever see an image like the one above and wonder how the computer can draw a box around a banana and know what it is? I had exactly those questions a long time ago, and they led me down the path of statistical learning with things like Bayesian methods and logistic regression. It was a great intro to AI and Machine Learning, and it has almost nothing to do with ChatGPT.

Exciting!

Computer Vision is an old field that got its start in the 1960s with a simple-sounding goal: hook a camera up to a computer and have it describe what it saw. That was easier said than done, and the field progressed slowly by inventing techniques to extract 3D information from 2D pictures. Techniques like “edge detection” led to things like “image segmentation,” which slices an image into smaller regions by finding the boundaries of objects and then labeling those regions (with the word “banana,” for example).

Fast-forward to today: modern hardware and machine learning techniques let us do these things in near real time on video. So when someone tosses a banana across the frame, you can get a fun little box that tracks it and might be labeled something like “flying banana.” Neat.

OpenCV - The Library

If you’d like to get started on a project to identify flying bananas, you could start from scratch and spend years trying to get it to work, or you could use a thing called OpenCV (Open Source Computer Vision Library). As far as I can tell, OpenCV is the most popular library for building imaging applications quickly. It is free to use, open source, and has been around long enough that it can do pretty much anything you’d want it to do:

> The library has more than 2500 optimized algorithms, which includes a comprehensive set of both classic and state-of-the-art computer vision and machine learning algorithms. These algorithms can be used to detect and recognize faces, identify objects, classify human actions in videos, track camera movements, track moving objects, extract 3D models of objects, produce 3D point clouds from stereo cameras, stitch images together to produce a high resolution image of an entire scene, find similar images from an image database, remove red eyes from images taken using flash, follow eye movements, recognize scenery and establish markers to overlay it with augmented reality, etc.

It is written in C++ but has official interfaces for Python, Java, MATLAB, and even JavaScript (sort of, via OpenCV.js), so you can use your favorite language to build your app.

Why would I need OpenCV when PyTorch can do ML on images?

Great question! You are totally right: you can train neural networks to do magical things on images using standard machine learning libraries like PyTorch. What OpenCV brings to the table is the ability to work with images at the pixel level. Identifying corners and edges, stitching images together, calibrating colors, applying pixel filters, and rotating, shifting, or resizing images are all hugely useful preprocessing steps before you throw data at a neural network. In fact, these algorithms are so optimized that you can run them in real time on image streams, so you could use them to buffer and normalize incoming frames while your neural network does its thing.

OpenCV also handles things like object detection, image classification, text detection, depth estimation, and facial recognition (all deep learning topics) more or less out of the box, mostly by running pretrained models (downloaded separately) through its dnn module, and it does these things in a highly performant way. Like, lightning fast.

The End

So, if you want to build a mini self-driving Tesla, maybe check out something like OpenCV before spending a ton of time gathering images and trying to train a neural network from scratch.